Most software engineers, or anyone who’s ever revisited any older code (or for that matter, text), that they’ve written, is familiar with that moment of disgust, that passionate search for the perpetrator of such atrocities, and the guilt-ridden self-loathing you experience when you realize that perpetrator is you.

Look on my Works, ye Mighty, and despair! - Past me to Present me

Being well acquainted with both self-loathing and writing bad code, you’d assume that I’m pretty well inured to any new revelations regarding my utter incompetence. Well, let me regale you with a story. About eight score and seven (technically only “and two”) weeks ago, I thought I had to make a statement to our engineering department that if we were going to adopt Terraform as our IaC (Infrastructure-as-Code1) tool, we should invest in developing reusable Terraform modules (a concept I was introduced to by the works of Yevgeniy Brikman).

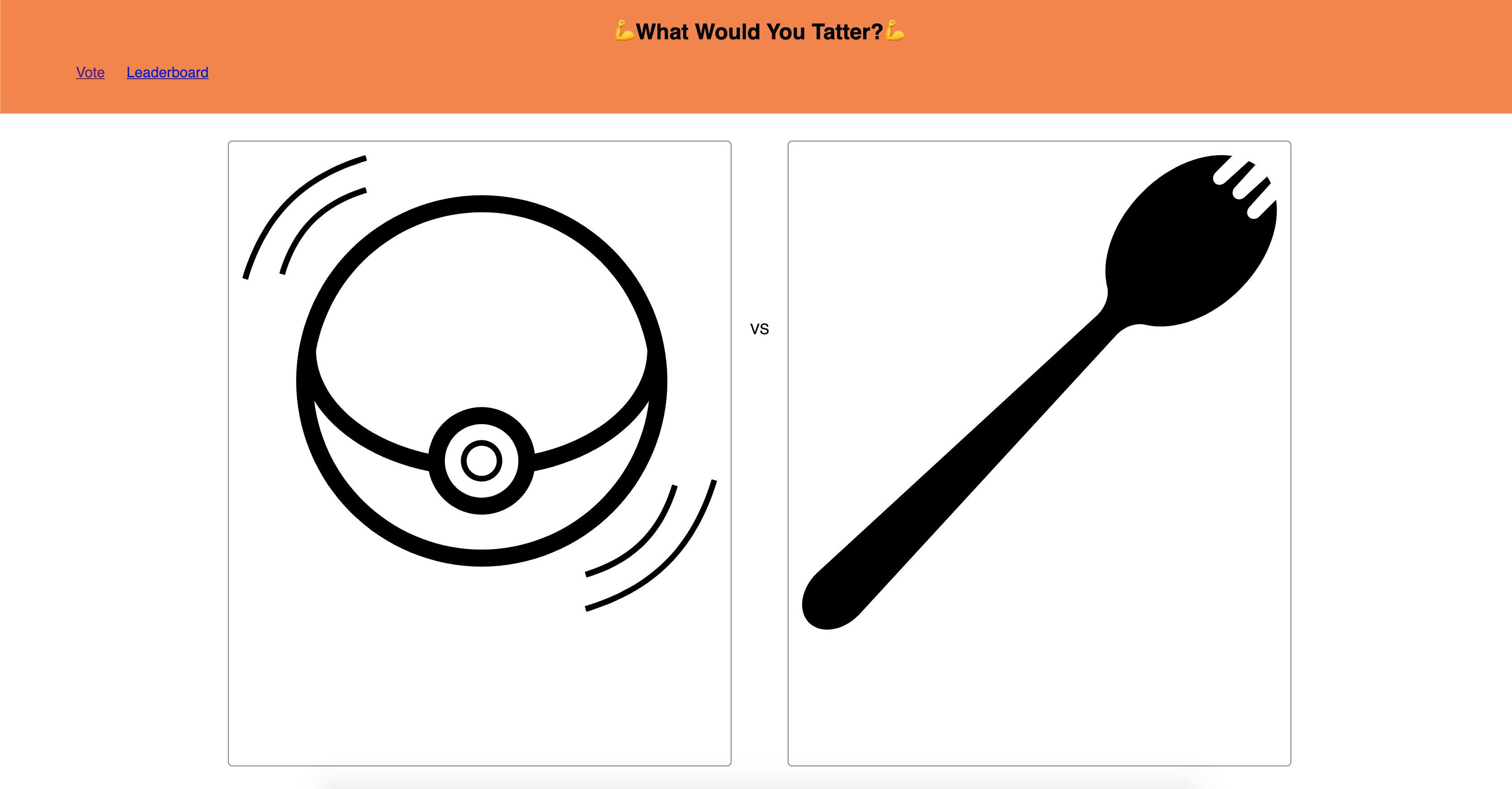

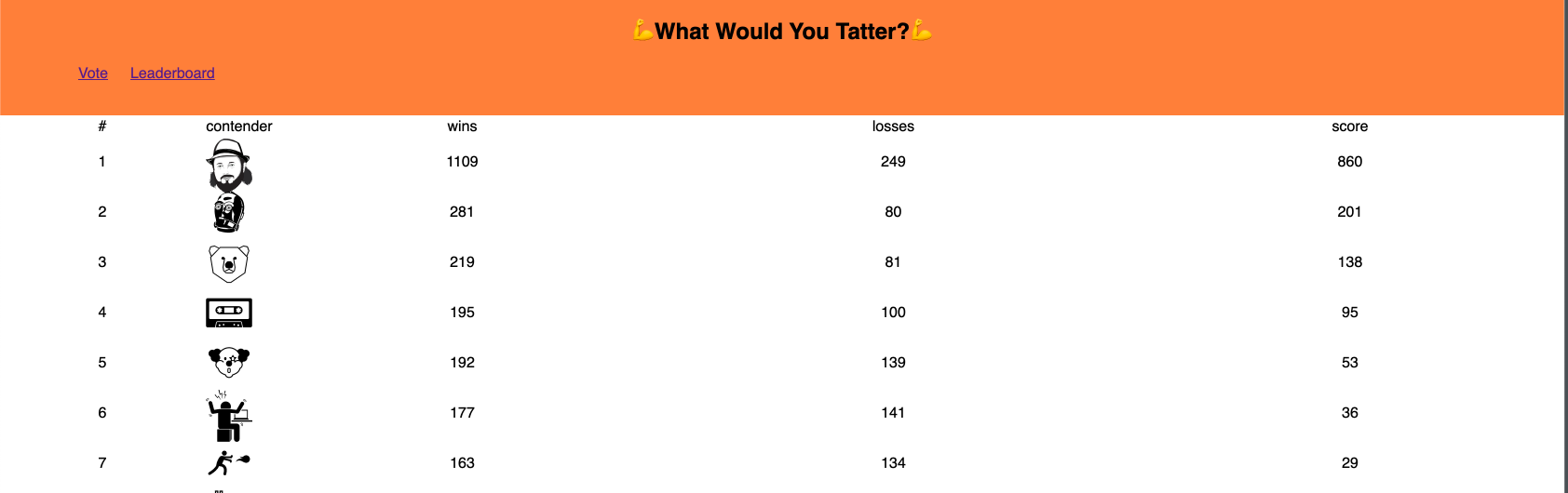

To that end, with the help of Alex Thomsen (you can see more of him on his

Github page) and the inimitable Josh Barratt, I put together a DynamoDB

backed API and frontend (100% the work of Alex) to vote on a series of head to head comparisons of SVGs to determine which ones

you’d rather tattoo on yourself, aptly, if unimaginatively, named wouldyoutatter. The source code can be found here, and you can still use a version of the website at wouldyoutatter.com.

This post isn’t really about that effort, however. It’s about the realization, brought upon by a remarkable talk, of how my hubris in my understanding of DynamoDB had let to a truly shockingly awful schema design. The talk I’m referring to is of course Ray Houlihan’s much lauded Advanced Design Patterns for DynamoDB. I realized that while I had about two years of experience designing and using DynamoDB tables, I didn’t have the faintest intuition as to how to effectively model non-relational data models. This realization obviously came too late for my pet project, but I’ve tried to leverage the understanding ever since. At the same time, I’ve had a nagging voice in the back of my mind telling me that I should revisit the design, and really put my shame out in the open.

The Old Schema

First off, let me apologize for the table formatting, I still need some practice with the NoSQL Workbench, since I wasn’t really able to produce a view with examples that was easily parseable. At any rate, below are the schemas for the various tables used in the app.

In terms of using the app, the main use case is for users to vote on different matchups:

This page uses cookies to determine what matchups a given user has seen before, and retrieves a matchup from the master set that is not currently in that user’s set. It also creates a TTL’d token valid for voting only on that specific combination, to try and limit gaming the system a bit (Narrator: “It still got gamed”). The user can either keep voting, or look at the leaderboard in the UI.

Finally, there’s also an API to query for the head to head record of two contenders. With this in mind, let’s look at the actual table design used originally.

Contenders Table

This is where the contender metadata gets stored, SVG included. The GSI is used retrieve the contenders in sorted order in a single call.

| Name (PK) | Leaderboard (GSI SK) | Score | Wins | SVG |

|---|---|---|---|---|

| bear | metadata | 10 | 12 | somegarblednonsense |

Matchups

Where the head to head statistics are recorded.

| Contender1 (PK) | Contender2 (SK) | Contender1Wins | Contender2Losses | Contender1Losses | Contender2Wins |

|---|---|---|---|---|---|

| books | c3po | 7 | 7 | 5 | 5 |

Possible Matchups

This is probably the lowest hanging fruit: a table with a single row, with every possible matchup combination.

| Primary Key | Attributes |

|---|---|

| master | { “bear§books”, “bear§c3po”,… } |

User Past Matchups

Containes the set of previously seen matchups for given users. The § is just an arbitrary delimiter

between contender names.

| Primary Key | Attributes |

|---|---|

| some-uuid | { “bear§books” } |

Tokens

Keeps track of the tokens, the matchup that they correspond to, and their expiry.

| TokenID | MatchupSet | TTL |

|---|---|---|

| ID | bear§books | ttl |

Why Change?

This design seems somewhat reasonably when looking at it from a more traditional relational database design perspective. However, like Houlihan calls out in his talks, this comes at a cost. When updating a contender’s record for example, we also need to update the matchup table. While non-global DynamoDB tables do support transactions like this, you have to pay additional read costs both before and after your writes. Moving to a single table design, we get to simply use Put and BatchPut operations on a single table, and we don’t have to worry about either using transactions, or rolling them ourselves.

There are additional savings to having a single table if you’re also provisioning for reads and writes, since you can simply right size one table, as opposed to having to right size multiple tables. Granted, I’d still typically lean for On Demand provisioning. To get into some more general upsides that aren’t as obvious by this example, I’d again recommend that you check out the talk

Evolving the Schema

Clearly, this design is a far cry from the idealized single-table per application design. So how can we redesign the schema leveraging the lessons from the talks? To start, we should enumerate the access patterns that we know about already:

- Get all possible matchups

- Get a contender, and their record

- Get a head-to-head record

- Get the current leaderboard

- Check a given token is valid for a given matchup

- Get the set of matchups a user has seen before

To make this exercise easier, we can group the access patterns together in logical groups

- Stats

- a) Get a contender, and their record

- b) Get a head-to-head record

- c) Get the current leaderboard

- Matchup Sets

- a) All possible matchups

- b) Matchups a user has seen

- Tokens

Let’s start with patterns 2 and 3, since they’re the most straightforward. It’s pretty clear that all matchups can be treated as a special case of a user’s previously seen matchups. To that end, we can use a “magic key” approach , e.g. instead of using some user’s UUID, using some special string.

| Primary Key | Sort Key | Matchups |

|---|---|---|

| user ID (UUID) | matchups | { “bear§books”, “bear§c3po”,… } |

| master-set | matchups | { “bear§books”, “bear§c3po”,… } |

As for token storage (access pattern #3), it doesn’t seem like much of a stretch to replace the value of the sort key

(matchups) with the specifically issued token (tokens are matchup-specific).

Amending our last example, we have:

| Primary Key | Sort Key | Matchups | Matchup | TTL |

|---|---|---|---|---|

| user ID (UUID) | matchups | { “bear§books”, “bear§c3po”,… } | - | - |

| user ID (UUID) | Token (UUID) | - | “bear§books” | TTL |

| master-set | matchups | { “bear§books”, “bear§c3po”,… } | - | - |

Since DynamoDB’s TTLs are on a per item basis we can set them on the tokens alone, without worrying about setting them on our indefinitely-lived rows. So we’ve now managed to satisfy half of our access patterns without even using a Global Secondary Index (GSI). If it’s not clear by now that I was doing it wrong the first time around… don’t worry, it will get worse as we get into modeling the stats that we need.

The first two stats-related query patterns (1a and 1b above) can clearly use the same trick we used for user matchups and tokens in our most recent example by using a special value for the sort key to indicate the overall contender record as opposed to the head to head records.

| Primary Key | Sort Key | Score | Wins | Losses | SVG | Contender1 Wins | Contender 2 Wins |

|---|---|---|---|---|---|---|---|

| bear | metadata | 10 | 12 | 2 | somegarblednonsense | - | - |

| books | c3po | - | - | - | - | 7 | 5 |

Something to note is that in this scenario, our attribute columns don’t match between the two entries. This was initially one of the most unintuitive things for me, and part of the reason why the original data schema had so many different tables. The key thing to note though, is that the types of our primary and sort keys are the same, and furthermore, this is where we can leverage the fact that DynamoDB is “schemaless”.

Now we have to address how we can get the leaderboard. As it stands, we could scan the table and get every “metadata”

value in the top score. This would be a costly operation however, and would only be exacerbated if combine this with

matchup/token table. What we can do though, is create our first GSI and leverage sparse indexes.

Since we have a limited number of contenders, we can simply add an attribute to our standard contender entries,

called leaderboard, and create a sparse index where the primary key is that attribute, and the sort key is the

overall record. These changes leave us with something like the table below (collapsing some of the columns into ... for the sake of readability):

| Primary Key | Sort Key | Score (GSI SK) | … | Contender1 Wins | Contender 2 Wins | Leaderboard |

|---|---|---|---|---|---|---|

| bear | metadata | 10 | … | - | - | true |

| books | c3po | - | - | 7 | 5 | - |

Putting it all together

We now know how to group our different query pattern groups into two distinct tables, but we can again leverage the fact

that the types of our primary and sort keys are in fact the same, and combine them into a single table. For the sake of

visual parsing, I’ll collapse row specific columns into a single * Attributes column, and only call out indices explicitly.

Row values containing ... mean the column is populated, while - means there are no values for that attribute set in that row.

| Primary Key | Sort Key | Score (GSI SK) | Contender Attributes | Matchup Attributes | MatchupSet | Token Attributes | Leaderboard (GSI PK) | TTL |

|---|---|---|---|---|---|---|---|---|

| bear | metadata | 12 | … | - | - | - | leaderboard | - |

| books | c3po | - | - | … | - | - | - | - |

| user ID (UUID) | matchups | - | - | - | { “bear§books”, “bear§c3po”,… } | - | - | - |

| user ID (UUID) | Token (UUID) | - | - | - | - | “bear§books” | - | TTL |

| master-set | matchups | - | - | - | { “bear§books”, “bear§c3po”,… } | - | - | - |

This new data schema seems strange when you’re still unfamiliar with single-table NoSQL designs. There’s inconsistency in attributes, primary and sort key values (but again, not the underlying types), and a sparse index to top it all off.

This discomfort comes from trying to understand the table as a logical subgrouping of an application, rather than a logical grouping of the entire application. Instead of looking at the data in the table, we can look at how we would access it.

Accessing the single table design

- Get a contender, and their record

- GetItem call, where the PK is the contender’s name, and the SK is

metadata

- GetItem call, where the PK is the contender’s name, and the SK is

- Get a head-to-head record

- GetItem call, where the PK is one of the contenders’ names, and the SK is the other’s

- Get the current leaderboard

- GetItem call, where the PK on the GSI is the attribute

leaderboard. The call should return sorted given the sort key

- GetItem call, where the PK on the GSI is the attribute

- All possible matchups

- GetItem call, where the PK is

master-setand the SK ismatchups

- GetItem call, where the PK is

- Matchups a user has seen

- GetItem call, where the PK is the user ID, and the SK is

matchups

- GetItem call, where the PK is the user ID, and the SK is

- Tokens

- GetItem call, where the PK is user ID, and the SK is the token itself.

- GetItem call, where the PK is user ID, and the SK is the token itself.

Not only do we have a single table on our hands now, but we also haven’t had to resort to costly measures like filter queries, nor have we had to add a significant amount of secondary indexes, which multiply the cost linearly on writes and reads.

Conclusion

While the data schema of this toy application is not particularly complex, I still would not have believed it possible (without an abundance of GSIs) to collapse the schema to a single table. Every DynamoDB schema I’ve designed at work since has followed this pattern and it becomes more natural with every use to intuit how the access patterns might be modeled. Hopefully the process of enumerating the access patterns of an app like this, logically grouping them, and comparing them, helps obviate how to approach NoSQL data modeling if you’re just starting out with it.

For more complex dives, aside from Ray Houlihan’s talk, I’d highly recommend DynamoDB’s own documentation , which has great examples of complex relational query access patterns modeled following the best practices detailed in the talk itself.